Moonshot Minute

This Tiny AI Play Just Shook the Market

Most Asymmetric AI Setup We’ve Seen in 2025

Over the past few months, my team and I have been tracking an AI trade that’s quietly shaping up to be one of the most compelling asymmetric opportunities I've seen this year.

It has been flying under the radar, ignored by the media, overlooked by most funds, and completely misunderstood by the market.

But we believe it has the potential to be the kind of trade you look back on years from now and say, "That was the one I wish I hadn’t missed."

No, it's not one of the usual AI suspects like NVIDIA, AMD, or Cerebras.

It's also not a hyperscale cloud platform or a GPU juggernaut.

But it could be one of the most asymmetric bets in the entire AI infrastructure ecosystem.

It sits at the intersection of two explosive themes: the AI arms race and the optical networking revolution.

Both are topics I've covered in past Moonshot Minute essays.

This small-cap company isn't trying to win on raw compute. It's focused on a different battlefield...

The invisible, light-speed plumbing that connects those compute engines together.

It aims to solve the one bottleneck that could break the AI buildout: moving massive amounts of data between clusters of AI chips with near-zero latency.

That bottleneck isn’t compute.

It’s the physical connection needed between these compute clusters.

The Hidden Crisis in AI Infrastructure

When people talk about scaling AI, they usually mean GPUs.

But here’s the thing few are saying out loud:

You could have 10,000 NVIDIA H100 GPUs in a data center and still not reach full performance.

Why?

Because if those chips can’t talk to each other quickly, cleanly, and with near-zero latency, you’re just stacking silicon in a warehouse.

As I’ve said before, AI doesn't scale on chips. It scales on bandwidth.

And the industry knows it. Quietly, behind the scenes, AI infrastructure is being rebuilt around light.

Photons are replacing electrons.

Optical engines are replacing copper lanes.

Wafer-scale integration is replacing labor-intensive fiber assembly.

And somewhere in the middle of all that, between the switches, the lasers, and the silicon, is a small-cap company with a monstrous idea.

We believe it has a shot to become one of the most important "picks-and-shovels" providers in the next wave of AI expansion.

It’s not profitable yet because it's in the pre-revenue stage.

It’s not mainstream.

But the risk/reward? Unreasonably asymmetric.

And earlier this month, it felt like we weren’t the only ones who noticed…

The Breakout That Shook the Tree

After consolidating for weeks, this stock exploded.

In less than two weeks, it surged over 50% at its peak, driven by:

- A new AI hardware innovation award for its optical engine platform

- News that it had shipped all of its 800G samples to customers, with 1.6T on the way

- A new manufacturing partnership in Malaysia to prep for high-volume orders

- A $25M financing at $5 per unit (common + warrant) taken entirely by a single institutional investor

- And the addition of a semiconductor industry veteran to its board

The market woke up.

Volume surged.

Momentum traders jumped in.

The stock hit a new 52-week high.

And then, as always happens in speculative setups, came the pullback.

Over the past few days, it’s cooled off. Nothing broke. No bad news. Just gravity doing its thing.

I'm now watching from the sidelines. Letting it breathe. Waiting to see if support holds.

But make no mistake: my excitement hasn’t dimmed.

If anything, it’s grown.

Because when you zoom out, the story here is almost too good.

The Bottleneck No One Is Solving (Except Maybe Them)

AI inference and training workloads are growing exponentially.

That means more and more data is being processed by AI systems during the initial learning phase (training), where models crunch huge datasets to learn patterns, and during live usage (inference), where those models respond to prompts or make decisions in real time.

The scale and complexity of both processes are skyrocketing as AI becomes embedded in everything from chatbots to autonomous systems.

But they aren’t growing on a single chip.

They’re growing on distributed compute networks, which consist of racks of GPUs connected by switches and communicating across data center clusters.

It’s something that looks like this:

The thing is, that system is under siege.

Power budgets are exploding. Cooling costs are rising. Bandwidth demand is breaking copper. And the next generation of transceivers, designed for terabit-scale data movement, is straining the limits of legacy optical infrastructure.

And then a company no one has ever heard of comes along with a radical idea.

Instead of building optical engines the old-fashioned way, from a chaotic mess of components hand-aligned in precision labs, what if you built them like semiconductors?

Laser, modulator, detector, and driver: in essence, every layer of the optical engine integrated at wafer scale into a single stackable module.

That’s what this company has done. They’ve developed a photonic integration platform that’s:

- Cheaper to manufacture

- Faster to assemble

- More thermally efficient

- Easier to scale

And as if that weren’t enough, this isn’t just a lab demo. This thing works.

In fact, customers have already shown it in their own modules at OFC (the Optical Fiber Communication Conference), with demos from Luxshare, ADVA, and others.

This team is already shipping next-gen optical engines to design partners.

In other words, it’s commercial.

The Partners and the Punchline

What makes this story even crazier is who they’re quietly working with.

I won't directly name their partners here, as I reserve that information for Premium Members. Until I’m ready to share this play with Premium, I don’t want to give away too much.

But what I can say is we’ve seen confirmed collaborations with:

- A leading multinational electronics company with a decades-long history in telecom and industrial systems

- An up-and-coming AI hardware startup integrating advanced photonics into its compute fabric

- Well-established module makers with direct exposure to hyperscaler demand

- One of Apple’s top suppliers

- And several unnamed hyperscaler-adjacent module makers in China and Korea

In short, this company isn’t selling directly to the cloud giants; instead, it’s doing something even bigger and better: it’s supplying the companies that supply them.

It’s a behind-the-scenes player, plugged into the critical hardware layers that make AI infrastructure work at scale.

That’s a big deal.

Because if even one of those designs gets selected for volume deployment, this little company could go from “pre-revenue” to “can’t make them fast enough.”

They’ve already secured manufacturing in a neutral, strategically chosen location that avoids political friction zones entirely.

They’ve also raised enough capital to operate for at least 6–8 quarters without needing to raise again.

And, most importantly, they’ve already shipped product.

What We Love About This Setup

Let’s summarize why this is one of the most exciting speculative setups we’ve seen in 2025:

✅ It’s attacking a critical bottleneck in AI data centers that almost no one is paying attention to

✅ It’s doing it with a proven, patented integration platform that slashes cost and complexity

✅ It’s already won design partners across Asia, Europe, and North America

✅ It has potential exposure to the AI supply chain through multiple vendors targeting optical modules

✅ It secured significant multi-million-dollar financing, quietly taken down by a single long-term investor with deep conviction

✅ And yet, it’s still a sub-$1B company with no sell-side coverage and limited institutional ownership

That’s what asymmetric looks like.

You’re betting pennies on the chance they’ve built something the giants need but can’t replicate.

Why We’re Watching… Not Buying (Yet)

I was ready to issue a buy recommendation to capitalize on the earlier momentum I described above.

But after that surge, and the inevitable pullback, we’re pausing.

Not because the thesis changed. If anything, we’re more bullish than ever.

But the setup isn’t as clean. The stock is hanging around short-term support. RSI is fading. Volume is drifting lower. It could be a reset… or a deeper retest.

So we’re holding our fire.

Not forever. Just for now.

If support holds and it consolidates, we’ll be back.

If it drops or retests the offering levels we’ve been tracking, we’ll be even more excited to step in.

And if it rips on a contract win, well, we may buy higher, but with more conviction.

That’s the game.

And with the multi-bagger setup I believe we have here, we don’t need to be early.

What Happens Next

Over the coming days and weeks, we’ll be watching for:

- Confirmation of purchase orders from any of the named (or unnamed) partners

- Volume manufacturing news from their new facility

- Any follow-up from hyperscaler vendors adopting their modules

- Follow-through from strategic investors or new institutional buying

If any of those triggers fire, we’ll move quickly.

And when we do, we’ll reveal the name to Premium Members, but I do have some bad news.

The price to join Premium is about to rise from $15 to $25/month, and from $150 to $250/year.

But…

When you join today before the increase, you’ll lock in the current rate for life, just like every other Premium Member.

And I urge you to do it now. Not only will you be grandfathered in at the current price for as long as you remain a member, but this might also be the only photon-layer AI play that hasn’t already gone vertical.

And when it does…

We’ll be ready.

Stay tuned,

Double D

P.S. Below is the message I sent to subscribers on Wednesday:

My research team just zeroed in on a small-cap AI stock with enormous asymmetric upside.

It’s the kind of moonshot I love: speculative, under-the-radar, and capable of moving the needle on your financial life.

While we finalize the research, let’s talk about what’s already happened…

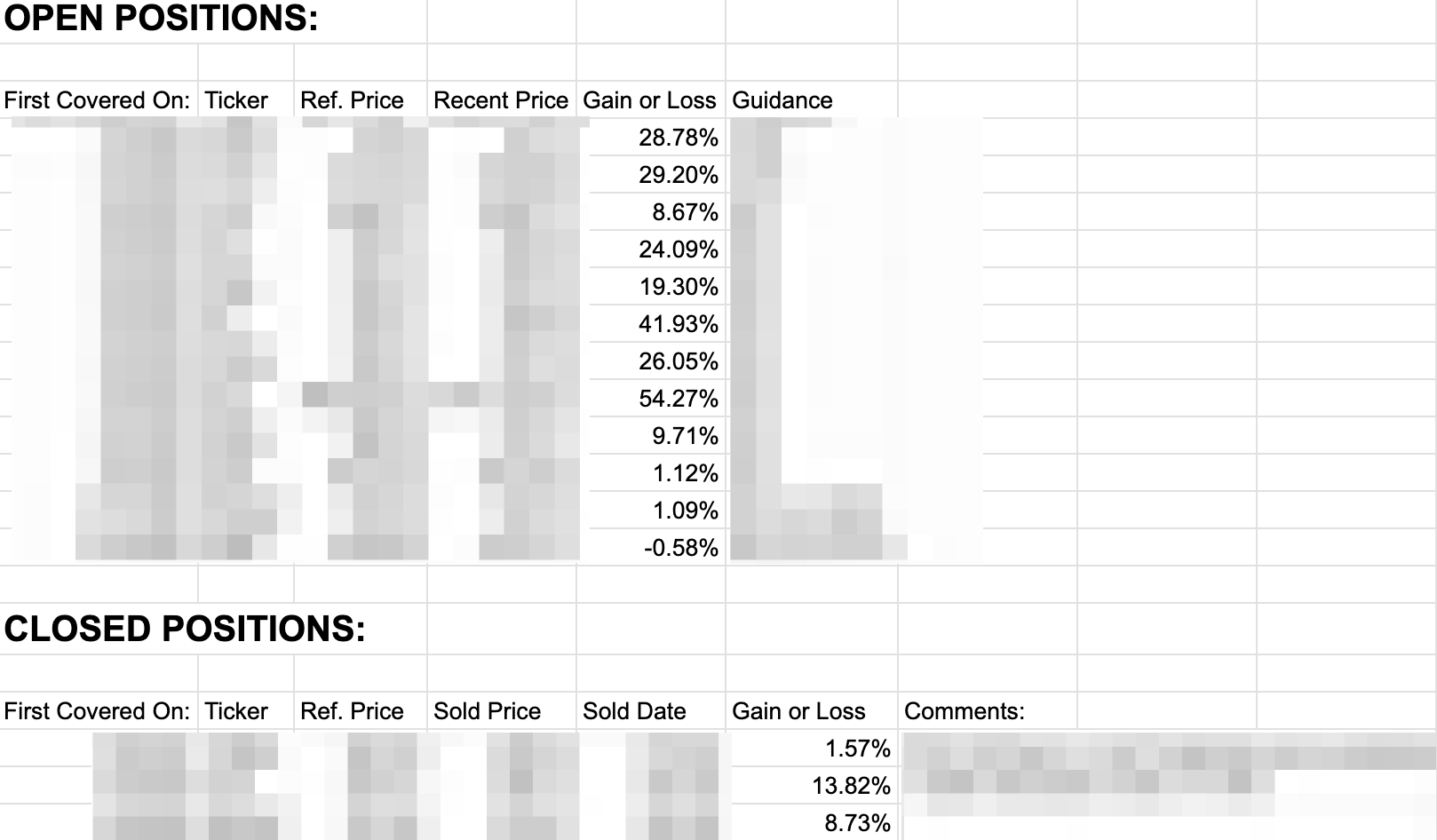

You may have seen what’s been happening inside the Moonshot Minute portfolio.

Position after position has quietly moved up. Some are up 20%… 30%… even 50%+.

Here’s the live snapshot as of today. I’ve blurred the names, of course, but the gains speak for themselves:

These weren’t long shots. They weren’t meme stocks. And they definitely weren’t obvious. Some of the biggest winners actually triggered hate mail early on… but nobody’s complaining now.

Every one of these plays was sent to Premium readers before the breakout.

If you’ve been following the free edition, you’ve had a front-row seat but no upside.

That ends now.

The price to join Premium is about to rise from $15 to $25/month, and from $150 to $250/year.

But here’s the good news:

When you join before the increase, you’ll lock in the current rate for life, just like every other Premium Member.

No matter how much value I pack in going forward, your price stays the same.

Because the results are real. The edge is real. And the window to get in before the price changes is closing fast.

As long as you decide to join before the price goes up, and you maintain your Premium membership, you’ll never pay more.

You’ve seen what’s possible.

Now you decide: do you want to keep watching, or finally start compounding?

Let’s go,

Double D

P.S. The price increase will hit as soon as I release the small-cap AI recommendation, likely in the next few days, no later than next week. Once it’s live, the window closes.